
(Alex) Dirk Freyling, independent researcher & artist

Knowledge is a privilege. Sharing is a duty.



[Technical note: In case this web page is displayed without its website menu ("frameless"), here is the complete elementary body theory web page.] The entire website kinkynature.com is roughly divided into two sections and is designed as an information site. Because of the abundance of information, the necessary "comprehension-promoting" readability and the aesthetics of the illustrations, a smartphone is not suitable for viewing. The extensive website menu of the science section (on the left of and above the selected topic page(s)) requires space. The first choice is a computer monitor; a tablet can be used with some restrictions.

The English version of the elementary body theory (EBT; German: Elementarkörpertheorie, EKT) has been in the works for a long time but, unfortunately, has so far only been realised in fragments...

The contents and model results regarding the phenomenology, derivation and calculation of concrete quantities, such as the proton radius, the anomalous magnetic moments, the derivation of Sommerfeld's fine-structure constant, the mass-space couplings that form matter, etc. (see the German selection menu of the ‘frame page’), have been worked out over many years.

A ‘didactically motivated modernisation’ for the purpose of comprehensibility is being considered. To this end, I have put a more interdisciplinary test page online in parallel, which ‘works’ in dialogue with test readers for better comprehensibility. See: An overdue rendezvous with causal rationality ...

What is it all about?

A sustainable paradigm shift.

What is meant here by paradigm shift?

The replacement of the standard (thought) models [SM ΛCDM] of theoretical physics.

[SM] Standard Model of (Elementary) Particle Physics (SM)

[ΛCDM] Standard Cosmological Model (ΛCDM model)

What takes their place?

A much clearer, more effective, interdisciplinary, mathematically simpler model, which describes the micro- and macro-cosmos in a standardised, cross-scale and predictive way.

ELEMENTARY BODY THEORY by Dirk Freyling 1986 - today [EBT]

The »Elementary Body Theory (EBT)« deals in particular with the question of how mass and space are linked to each other in a fundamental way and how their ‘interplay’ leads to understandable formations of matter that can be calculated formally and analytically, both microscopically and correspondingly macroscopically. Neither a variable time, nor mathematical space-time constructs, nor any form of sub-structuring is demonstrably necessary for a clear understanding or for the resulting equations of the phenomenologically founded elementary body theory. Less is more... Contrary to standard physics, which postulates four fundamental forces, elementary (scale-corresponding) mass-space coupling reduces every interaction to the mass-to-radius ratio. This leads to constructive, “easy to understand” objects, which can be expressed either by their radii or by their reciprocally proportional masses.


I don't like supervised thinking. Whether a statement by my fellow human beings corresponds to my ideas is not a criterion for whether I present it. I am a passionate advocate of aphoristic "language images". By the way: “The language of good science is bad English.” This is what Ekkehard König, one of the most renowned Anglicists in Germany, ironically says. The "words made here", partly in the form of satire, irony and cynicism, are an expression of indignation and incomprehension about the questionable, established arbitrariness of today's theoretical models for describing matter. A more precise formulation on my part is often possible, but the majority of readers would then not understand it because of its strongly mathematised, formal content: the technically necessary detailed knowledge is usually understood only by those who do not want to hear or read it, since it demonstrably leads the analysed standard models ad absurdum.

Preface

Nature can only add or subtract. A “secured” »higher mathematical reality« exists exclusively within the framework of an axiomatically based language (mathematics). Whether a correct mathematical structure (»higher mathematical reality«) is physically applicable cannot be decided with the “means” of mathematics (see the “indisputably exemplary” epicycle theory and the Banach-Tarski paradox). Mathematics ultimately captures quantities and cannot distinguish between a vacuum cleaner ("dust cleaner") and dust (Staubsauger und Staub). Whereas Euclid (who probably lived in the 3rd century B.C.) still looked for plausible intuition as a foundation of mathematics, and thus made an interdisciplinary connection that could be evaluated as right or wrong, in modern mathematics the question of right or wrong does not arise. Euclid's definitions are explicit and refer to extra-mathematical objects of "pure contemplation" such as points, lines and surfaces: "A point is that which has no part. A line is breadthless length. A surface is that which has length and breadth only." When David Hilbert (1862 - 1943) axiomatized geometry anew at the turn of the 20th century, he used only implicit definitions. The objects of geometry were still called "points" and "straight lines", but they were merely elements of sets that were not further explicated. Hilbert stated that instead of points and straight lines one could always speak of tables and chairs without disturbing the purely logical relationship between these objects. But to what extent axiomatically based abstractions couple to real physical objects is another matter altogether. Mathematics does not create (new) phenomenology, even if theoretical physicists like to believe this within the framework of the standard models of cosmology and particle physics.

A historical review shows that people who draw attention to mistakes have to reckon with not being believed for decades, while those who publish spectacular “air numbers” within the framework of existing thought models are honoured and courted. Critics are perceived by the masses as disagreeable troublemakers. In the words of Gustave Le Bon: “The masses have never thirsted for truth. They turn away from the facts which they dislike and prefer to idolize error when it is able to seduce them. Whoever knows how to deceive them easily becomes their master, whoever tries to enlighten them always becomes their victim.” Whoever strives to belong to a group does not become a critical thinker. Logical and methodical analytical skills, knowledge of historical relationships, self-developed basic knowledge instead of reproduced literature, ambition, self-discipline and a good portion of egocentrism are basic requirements for independent thinking and acting.

Theory claim and empirical findings

An experiment needs a specific question in order to be conceived. If the question is the result of a mathematical formalism, the result of the experiment is correspondingly theory-laden. If the measurable results are then preselected and only indirectly "connected" to the postulated theory objects, as is usual within the standard models, nothing stands in the way of arbitrary interpretation. Thought models must absolutely be conceptually translatable in order to acquire an epistemological meaning. A mathematical equation that cannot be conveyed outside its formalism is always an »epistemological zero« in the context of a physical thought model.


Remember: Divergence problems are theory-based. The internal structure of the energy sources is simply not “captured”. Taking into account the finite, real-physically oriented, phenomenological nature of objects, the "infinities" resolve plausibly.

The self-evident fact that the distance on a spherical surface does not correspond to the "straight" distance between points A and B requires no abstraction.

This leads to the

"abstract"

The elementary body theory (Elementarkörpertheorie [EKT]) is based on plausibility and minimalism and provides phenomenologically based equations without free parameters, together with a formalism whose results are in good to very good agreement with experimentally measured values. Neither a variable time, nor mathematical space-time constructs, nor any form of sub-structuring is necessary for a clear understanding or for the equations generated by the phenomenologically based elementary body theory.

The time-dependent elementary body equations are derived from the observed invariance of the (vacuum) speed of light. The fundamental difference from the (special) theory of relativity, or rather from the Lorentz transformation, is the radially symmetric, dynamic character of these equations. The main object of the elementary body theory is the elementary body, originally conceived as a pulsating hollow sphere. At maximum expansion, the hollow spherical shell mass is at rest. The equations of motion, based on a sine function, describe the complete transformation of motion energy without rest mass (photon) into mass.

The basic mass-space model requires that the equations portray both the massless photon and mass. The equations

$$r(t) = r_0 \cdot \sin\left(\frac{c \cdot t}{r_0}\right) \qquad \text{and} \qquad m(t) = m_0 \cdot \sin\left(\frac{c \cdot t}{r_0}\right)$$

do exactly that. The timeless speed of light, as a state of pure motion, does not contradict the matter-energy embodiment.

The transformation from a photon to a mass-radius-coupled space does not correspond phenomenologically to a partial oscillation, as was initially assumed (also) within the framework of the elementary-body-model. The matter-forming transformation of a photon corresponds to an irreversible »state change«.

Time reversal, as required "mechanistically" from classical physics through to quantum mechanics, generally contradicts measured reality (thermodynamic processes). The fully developed elementary body ($r(t) = r_0$, $m(t) = m_0$) cannot return to the state of the photon by itself.

The time-dependent mass formation is coupled to the time-dependent growth of the radius, r = r(v(t)). In simple words, the initial, pure motion energy gives rise to time-dependent spherical surfaces, which as such span a space whose reciprocal size is a measure of the equivalent mass.

After a quarter period (phase $c \cdot t / r_0 = \pi/2$), the elementary body is fully developed ($r(t) = r_0$, $m(t) = m_0$), meaning that the expansion velocity v(t) is zero. Since the process of rest-mass reduction corresponds to an inversion of the relativistic dynamics of a velocity-dependent momentum mass, the energy-conserving internal dynamics of the elementary body is suggestively called momentum-mass inversion.
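To make the time dependence concrete, here is a minimal Python sketch that evaluates the two stated equations, r(t) = r0·sin(c·t/r0) and m(t) = m0·sin(c·t/r0), together with the expansion velocity obtained by differentiating r(t), v(t) = c·cos(c·t/r0). The function names and the example values of r0 and m0 are hypothetical and serve only as illustration. At t = 0 the sketch gives r = 0, m = 0 and v = c (the photon state); at the quarter period c·t/r0 = π/2 it gives r = r0, m = m0 and v ≈ 0.

```python
import math

C = 2.99792458e8  # vacuum speed of light in m/s


def radius(t: float, r0: float) -> float:
    """r(t) = r0 * sin(c*t / r0), as stated in the text."""
    return r0 * math.sin(C * t / r0)


def mass(t: float, r0: float, m0: float) -> float:
    """m(t) = m0 * sin(c*t / r0), as stated in the text."""
    return m0 * math.sin(C * t / r0)


def expansion_velocity(t: float, r0: float) -> float:
    """v(t) = dr/dt = c * cos(c*t / r0), the time derivative of r(t)."""
    return C * math.cos(C * t / r0)


def quarter_period(r0: float) -> float:
    """Time t at which the phase c*t / r0 reaches pi/2."""
    return (math.pi / 2) * r0 / C


if __name__ == "__main__":
    r0, m0 = 1.0e-15, 1.0  # hypothetical example values (m, kg)
    t_end = quarter_period(r0)
    for t in (0.0, t_end / 2, t_end):
        print(f"t = {t:.3e} s, r = {radius(t, r0):.3e} m, "
              f"m = {mass(t, r0, m0):.3f} kg, v = {expansion_velocity(t, r0):.3e} m/s")
```

Floating-point rounding leaves v at the quarter period as a tiny residual (of order 1e-8 m/s) rather than exactly zero.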

State as information = photon: at $t = 0$, the entire energy is available as pure information, mass- and spaceless.

Information as a material condition = elementary body: at $c \cdot t / r_0 = \pi/2$, i.e. $t = \pi r_0 / (2c)$, the total energy is "present as" mass $m_0$ with radius $r_0$.

Phenomenologically, the transformation of motion information into spatial information is complete. Without external interaction, the elementary body remains in this state. If the elementary body is "excited" from outside, different interaction scenarios occur which, depending on the energy of the interaction partners, lead to partial or (full) annihilation. The simplest matter-forming partial annihilation is the proton-electron interaction (keywords: Rydberg energy, hydrogen spectrum). Mass-coupled space annihilates according to r(t) and m(t); "radiation" is absorbed or emitted. Any reversal of an interaction must come about through excitation from outside. This could be the interaction with other elementary bodies, photons or "embodied fields", which can always be understood as elementary body (states). As mentioned in the context of the derivation of the mass-energy equivalence E = mc², the basic misunderstanding ("outside" the elementary body theory) is that the properties of an interacting photon are projected onto the "resting state" of the photon. However, according to equation [P2.3] and its time derivative [P2.3b], as well as [P2m], the »resting state« of the photon is the space- and massless, "light-fast" (energy) state of maximum motion. This means that information is propagated that "unfolds" only upon absorption (interaction) of the photon in accordance with equations [P2.3], [P2m] and their derivatives; only then do the time-dependent phenomena of interference and (mass-based) collision appear. For photons in interstellar space, the light path, and thus the photon, is invisible. Only when an interaction (absorption) "appears" does the photon become visible (detectable).

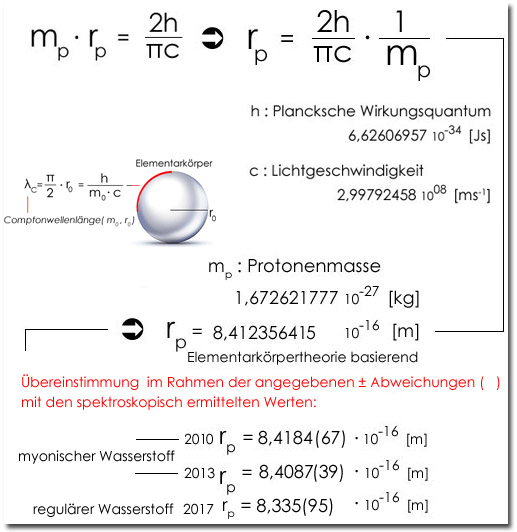

Exact calculation of the proton radius

A consideration of the elementary body provides an accurate theoretical value for the proton radius. Based on elementary body theory, the proton radius is the

This result is in excellent agreement with the measured value of the proton radius (muonic hydrogen measurements at the Paul Scherrer Institute in Switzerland, July 2010 and 2012/2013).

"abstract": [ http://www.psi.ch/media/proton-size-puzzle-reinforced ]
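The explicit formula is missing from this copy of the page. As a sketch, assuming that the proton radius follows from the mass-radius-constant equation [F1] stated later in the text, m·r = 2h/(πc), i.e. r_p = 2h/(π·c·m_p), the value can be evaluated in Python. This is an assumption, not the author's printed equation; the helper `mass_coupled_radius` is hypothetical, the proton mass is the value used elsewhere on this page and h is the exact SI value.

```python
import math

H = 6.62607015e-34     # Planck constant in J*s (exact SI value)
C = 2.99792458e8       # speed of light in m/s
M_P = 1.672621898e-27  # proton mass in kg, as used elsewhere in this text

# [F1] mass-radius constant: m * r = F_EK = 2h / (pi * c), in kg*m
F_EK = 2 * H / (math.pi * C)


def mass_coupled_radius(m: float) -> float:
    """Hypothetical helper: radius coupled to mass m via [F1], r = F_EK / m."""
    return F_EK / m


r_p = mass_coupled_radius(M_P)
print(f"r_p = {r_p:.6e} m = {r_p * 1e15:.4f} fm")
```

Under this assumption r_p comes out at about 0.8412 fm; for comparison, the CREMA collaboration's 2013 muonic-hydrogen result is 0.84087(39) fm.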

All cosmological "observational studies" are not controllable laboratory experiments. The postulated theoretical implications strongly influence the interpretation of the experiments. The human observation period is extremely short compared with the periods of time in which cosmic motions took place and played out. To substantiate assumptions with data from the human observation period is, to put it casually, "far-fetched". All current, supposedly empirical measurements are (Big Bang) theory-laden. Postulated time spans, distances and energy densities are subjective-theoretical. The entire present physical view is based on the paradigm of "physical space-time". Even Isaac Newton considered the idea that gravitation could act through empty space absurd. Considered superordinately and as a whole, it is anything but trivial to regard space and time as physical "objects". Space and time are primarily "order patterns of the mind". In order to "preserve" physics from these mind-patterns, a phenomenological examination and explanation are absolutely necessary. By the way... the Earth does not move around the Sun. As Isaac Newton already stated in his Principia (1687), the Sun and the planets move around the centre of mass of the solar system, called the barycentre. “Remember”: space-time is not measurable. The postulate states that, within the framework of the (formalised) theory of relativity, time and space exist only as a unity. One consequence: we cannot record events in the postulated general-relativistic cosmos as a “snapshot” in time, because time does not exist in space-time. According to the general theory of relativity (GR), there is only space-time, and space-time cannot be experienced by the senses or by measurement. By the way... popular-science explanations of the general theory of relativity (GR) are all inadmissible interpretations, because the complexity necessary for understanding it is not taken into account.
This is as if somebody presented Chinese characters for viewing and discussion to an audience unable to read them. Then everything and nothing can be read into them, since nobody has the prerequisites for decoding them, nor the ability to write Chinese characters. To put it boldly: in GR, even typographical errors lead to new solutions, and this is (already) true for people who do differential geometry professionally. Despite the impossibility of depicting various mathematically generated theoretical objects and interaction scenarios in a generally understandable and plausible way, it is didactically widespread to convey knowledge with "simplified" (unfortunately wrong) views. An example is the inflated balloon with markings (which are supposed to represent planets, stars, galaxies) that is meant to depict the cosmic expansion after the Big Bang. This creates a sense of vivid understanding in the viewer. But this balloon analogy is fundamentally wrong. Because… it is not space, or in simplified form a balloon surface, that expands, but the mathematical construct of space-time.

iii) According to today's ΛCDM model, the expansion is accelerating. In the "balloon understanding", the radius expands uniformly or at a slower rate (up to a material-related maximum before the balloon bursts). iv) Also concealed are the postulated, theory-dependent inflation period (faster than light, strongly limited in time) as well as the assumptions and effects of the postulated dark matter and dark energy, which do not occur in the balloon model. And overall, the arbitrariness problem of the free parameters is compounded by the unavoidable "axiomatic violation" of the covariance principle. The general theory of relativity was born, among other things, out of the demand to be able to use any coordinate system to describe the laws of nature. According to the covariance principle, the form of the laws of nature should not depend decisively on the choice of a particular coordinate system. This requirement is mathematical in origin and leads to a variety of possible coordinate systems [metrics]. The systems of equations (Einstein, Friedmann) of the general theory of relativity, which form the basis of the statements of the Standard Model of Cosmology, do not provide analytical solutions. According to the GR postulate, not only mass but every form of energy contributes to the curvature of space-time, including the energy associated with gravity itself. Therefore, Einstein's field equations are non-linear. Only idealisations and approximations lead to computable solutions, and only to a limited extent. The unavoidable ("covariant") contradictions come with the obviously inadmissible idealisations and approximations of the system of non-linear, coupled differential equations. Mathematically, the covariance principle cannot be "violated" because it is axiomatically justified. But this axiomatic premise "disappears" with the "mutilation" (idealisations and approximations) of the actual equations.
In other words, the mathematically correct equations have no analytical solutions. The reduced equations (approximations, idealisation) do have solutions, but these are not covariant. Thus, no solution has a real-physics-based meaning. This way of using mathematics is arbitrary, since different results are obtained depending on the "taste" of the (self-)chosen metric. The 'big bang problem' as a 'primordial singularity' only occurs theoretically if Einstein's credo (: irreversibility is an illusion) is true. This conclusion means, however, that thermodynamics is consistently negated from the outset and one restricts oneself to the flawless Hamiltonian mechanics as the basis of GR, or at least only isentropic processes (no entropy change) are considered in order to at least 'save' the phenomenon of background radiation...

The gravitational constant γG used in the well-known Newtonian law of gravitation refers to the "length-smallest" body G {elementary quantum}. This is not obvious, since the conventionally formulated law of gravitation does not explicitly reveal this original connection.

The secret of the very weak gravitation relative to the electrical and strong interactions lies in the false assumption that there is, in general, a mass-decoupled space. If one considers the space that a macroscopic body spans both by its object extension and by its interaction radius, it becomes clear that the "missing" energy is (in) the space itself. In this sense, the gravitational constant γG is the "measure of things" for macroscopic bodies.

Macroscopic bodies and gravity

For bodies with radius-mass ratios different from rG / mG, this means, "colloquially simply", that "work" had to be done to span a larger (body) space than is naturally coded in the smallest, most massive elementary quantum {G}. Taking energy conservation into account, this energy can only come from the mass-dependent rest energy. In the mass-dependent interaction of gravitation, only the mass fraction that remains after deducting the mass equivalent of this space energy (the effective mass) then takes part.

Well-known macroscopic objects (... billiard ball, football, Earth, Sun, ...) obviously do not satisfy the mass-radius-constant equation [F1]. Their real size is many orders of magnitude larger (even before any interaction) than the mass-radius coupling of equation [F1] demands for elementary bodies.

In addition, the ratio (RO / MO) is many orders of magnitude greater than that of the governing elementary quantum. Without knowing the concrete nature of the many-body interleaving, it can be generally understood that the [space] energy of the gravitational interaction, which seems to be missing relative to the rest energy, lies in the real physical object extension, which is determined by the object radius RO and by the interaction distance r (interaction radius).

From this follows the effective interaction mass $M_{eff} = (r_G / r) \cdot m_x$, which is expressed indirectly by the gravitational constant in Newton's law of gravitation. The gravitational self-energy equivalent to the effective interaction mass can (also) be derived from the radius-mass-coupled energy of the elementary body (compare equation [E1r]), taking into account the "scaling" by rG / RO.

It follows from these simple plausibility considerations that gravitation, on the basis of the phenomenology of an energy-conserving, mass-radius-coupled space, can be determined formally and analytically within the framework of "simplest" mathematics. The inner spatial composition and interleaving of the atomic or molecular structure of macroscopic many-body objects has no influence on the gravitational force or gravitational energy as long as the interaction radius r is greater than the object radius RO (r > RO = "elastic interaction").

Self-similarity

The assumption is that the ratio of the time-dependent universe radius to the time-dependent universe mass is time-independent(!). Multiplying (rUni / mUni) by c² gives the gravitational constant: $(r_{Uni} / m_{Uni}) \cdot c^2 = \gamma_G$. These assumptions include a "beautiful" correspondence between the cosmos, the gravitational constant and the elementary quantum {G with rG, mG}, or respectively the Planck quantities for mass (mPl = ½ · mG) and length (radius, rPl = ½ · rG). These assumptions lead to concrete calculations, such as the maximum universe mass, the maximum universe radius and, with the help of the hydrogen parameters (electron mass, proton mass and ground state energy), the background radiation temperature.
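The correspondence between γG, the elementary quantum {G} and the Planck quantities can be checked numerically. The Python sketch below assumes (i) γG = c²·rG/mG, by analogy with the cosmic relation (rUni/mUni)·c² = γG above, and (ii) mG·rG = 2h/(πc), the mass-radius constant [F1] stated later in the text. Solving both for mG and rG and comparing with the conventional Planck mass √(ħc/γG) and Planck length √(ħγG/c³) tests the stated relations mPl = ½·mG and rPl = ½·rG. The CODATA 2018 value of the gravitational constant is used; variable names are illustrative.

```python
import math

H = 6.62607015e-34        # Planck constant in J*s
HBAR = H / (2 * math.pi)  # reduced Planck constant
C = 2.99792458e8          # speed of light in m/s
GAMMA_G = 6.67430e-11     # gravitational constant in m^3 kg^-1 s^-2 (CODATA 2018)

# Assumption (ii), [F1]: m_G * r_G = F_EK = 2h / (pi * c)
F_EK = 2 * H / (math.pi * C)

# Assumption (i): gamma_G = c^2 * r_G / m_G. Solving (i) and (ii) for {G}:
m_G = math.sqrt(F_EK * C**2 / GAMMA_G)
r_G = math.sqrt(F_EK * GAMMA_G / C**2)

# Conventional Planck mass and Planck length, for comparison
m_planck = math.sqrt(HBAR * C / GAMMA_G)
l_planck = math.sqrt(HBAR * GAMMA_G / C**3)

print(f"m_G = {m_G:.5e} kg, m_G / m_Pl = {m_G / m_planck:.6f}")
print(f"r_G = {r_G:.5e} m, r_G / l_Pl = {r_G / l_planck:.6f}")
```

Because 2h/(πc) equals 4ħ/c, both ratios come out as exactly 2 (up to rounding), which is the stated mPl = ½·mG and rPl = ½·rG.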

elementary body based universe (more) details, phenomenology, ... see here

Hydrogen is by far the most abundant matter in the universe; it accounts for approximately 90% of interstellar matter (by number of atoms). As shown, the omnipresent hydrogen in the universe is the "source" of the 3 K background radiation. For reasons of consistency, the Rydberg energy value follows stringently from the elementary-body-theory-based proton-electron interaction. This ensures that all the equations used can be linked together without any approximation. The deviation from the spectroscopically measured Rydberg energy is: $E_{Ry}^{\text{EB-theory}} / E_{Ry}^{\text{experimental}} \approx 1.0000025$.


Conclusion: The above basic analysis of gravity dispels various myths about how a universe forms (keywords: graviton, inflation field, dark energy, dark matter, ...). Neither standard GR differential-geometric considerations, faster-than-light velocities nor four-dimensional space-time constructions are necessary. Furthermore, the gravitational interaction described here cannot be phenomenologically "unified" with quantum field theory (QFT), since there is no need to do so. The postulated "exchange particle objects" of the Standard Model of Particle Physics (SM), i.e. gluons and vector bosons and, in the broadest sense, neutrinos, do not "couple" to gravity even if these "force mediators" existed. Without elaborating on this here: there are neither gluons, vector bosons nor neutrinos (see the chapters Standard Model, Neutrinos and the remarks (further down) about the Higgs boson and SM fantasies).

Furthermore:

Concept of electric charge

Electric charge is a secondary term/concept of standard physics that suggests a "phenomenological entity" uncoupled from the mass (and the radius) of the charge carrier.

Based on elementary-body theory, all charge interactions can be clearly traced back to mass-radius couplings. Conveniently, electric charges in the elementary-body model occur only as an implicit function of the Sommerfeld fine-structure constant α, as a (formal) result of the mass-radius coupling. The "keys" to understanding the formation of matter are the phenomenologically founded charge possibilities: the energetically (strong) elementary body charge q0 (which energetically equals m0) and the elementary electric charge e.

f7 was "introduced" to show that the [elementary body] charge q0 is ("only") a scaled mass-radius function.

Side note

Particle physicists generally use the phenomenologically incorrect term decay even though they mean transformation. Decay would mean that the decay products were (all) components of the original particle. But that is not the case, at least not within the theoretical implications and postulates of the Standard Model of particle physics (SM).

Charge-dependent matter formations

Basics

The extended charge principle leads beyond the elementary-body-theory-based formation of the hydrogen atom to additional proton-electron interactions. From the generalised, clear phenomenological process, the neutron (e-q0 interaction) and pions (q0-q0 interaction) follow stringently as energetically possible (unstable) "particles". Without going into detail here, the charged pions “decay” (convert) into muon and anti-muon and then into electron and positron. Overall, "diverse elementary particles" can be formed in "formal analogy" within the extended charge concept. Noteworthy is the fact that this formalism provides simple solutions without free parameters that are in good agreement with the (energy and mass) values of the "formed particles". The reduced mass of the electron easily shows how a model view "works wrongly". In celestial mechanics, a "small" centroid shift from the proton towards the electron results because the mass of the proton is finite. From the point of view of two equal charges, this assumption is unfounded, since in the standard view of physics masses act only via the (misunderstood) gravitation, which is almost forty powers of ten weaker than the electrical force. Overall, in the world view of prevailing physics, a mass cannot interact with a charge, because no such phenomenology exists. It is astonishing how this fact has been ignored, mass-psychologically, over generations and is still being ignored.

... there is no centre-of-mass shift "between two equal |charges|"

BUT: The model view of an interaction between "charges" at a distance r from each other does not occur in matter-forming elementary-body theory. In elementary-body theory, the "charges" overlap with/in a common origin.

Reduced mass alternative in a mass-space-coupled model

Let's start with the superposition of two elementary bodies A and B, with the masses mA and mB and the mass-coupled radii rA and rB. By the mass-radius-constant equation [F1] there is no "room" for interpretation ► The result applies to all charge-carrier constellations (... A-B, proton-electron, proton-muon, ...)

Here one can clearly see that the alleged centre-of-gravity correction of the "celestial-mechanical" model has nothing to do with two interacting charges at a distance r (for example proton and electron), since electron and proton, as equal charges, cannot undergo such a shift, either phenomenologically or computationally. NOTICE! Equating an electrical centripetal force with a (purely) mass-dependent centrifugal force is phenomenologically unfounded in the context of physics and is reminiscent of the epicycle theory. The expression for the resulting mass m(rA + rB) in equation [MAB] is mathematically identical to the celestial-mechanical centroid correction of two macroscopic masses (reduced mass), which computationally interact elastically as point masses, but the phenomenology behind equation [MAB] is a completely different one. Furthermore, the calculation of ground state energies is possible neither quantum mechanically nor quantum electrodynamically, since a significant part of it is determined by the ratio of the interacting masses. Neither QM nor QED offers a quantum-field-phenomenological basis for introducing the reduced mass

$$m_{red} = \frac{m_A}{1 + m_A / m_B}$$

The reduced mass, whether one wants it to be true or not, is historically derived from "Newtonian celestial mechanics" within the framework of standard physics. In plain language, this means that, with regard to atomic interactions, these calculations are justified neither by QM nor by QED. QM and QED are "epicyclic".
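The following Python sketch checks numerically that the quoted form m_red = mA/(1 + mA/mB) is the same as the textbook two-body reduced mass mA·mB/(mA + mB), the celestial-mechanical expression that the text says [MAB] reproduces, and evaluates it for the electron-proton pair with the masses used on this page. Function names are illustrative.

```python
import math

M_E = 9.10938356e-31   # electron mass in kg (value used in the text)
M_P = 1.672621898e-27  # proton mass in kg (value used in the text)


def reduced_mass(m_a: float, m_b: float) -> float:
    """m_red = m_A / (1 + m_A / m_B), the form quoted in the text."""
    return m_a / (1 + m_a / m_b)


def reduced_mass_product_form(m_a: float, m_b: float) -> float:
    """Algebraically equivalent textbook form m_A * m_B / (m_A + m_B)."""
    return m_a * m_b / (m_a + m_b)


mu = reduced_mass(M_E, M_P)
print(f"m_red = {mu:.9e} kg, m_red / m_e = {mu / M_E:.8f}")
print("forms agree:", math.isclose(mu, reduced_mass_product_form(M_E, M_P)))
```

For the electron-proton pair the reduced mass is only about 0.054% smaller than the electron mass, because m_e/m_p ≈ 1/1836.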

Charge-dependent matter formation possibilities

The charge-dependent matter formation generally describes the A-B interaction possibilities. A and B are elementary bodies with the masses mA and mB and the reciprocally proportional radii rA and rB. The following applies:

$$m_A \cdot r_A = m_B \cdot r_B = F_{EK} = \frac{2h}{\pi c} \qquad [F1]$$

The phenomenologically founded formalism leads to the equations:

In the above (matter-forming) α-function equations, only the masses mA and mB of the interacting elementary bodies occur as variables. The charge as such, or more precisely the charge magnitude, is implicitly determined by the functional relationship of the Sommerfeld fine-structure constant α. (For details and derivations, see »Ladungsabhängige Materiebildungen« [Charge-dependent matter formations].)
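As a quick numerical illustration of [F1], the Python sketch below evaluates F_EK = 2h/(πc) (equivalently 4ħ/c, since h = 2πħ) and the radii it couples to the electron and proton masses used on this page. It only demonstrates the reciprocal proportionality mA·rA = mB·rB; the α-function equations themselves are not reproduced here. Variable names are illustrative.

```python
import math

H = 6.62607015e-34  # Planck constant in J*s
C = 2.99792458e8    # speed of light in m/s

F_EK = 2 * H / (math.pi * C)  # [F1]: m_A * r_A = m_B * r_B = 2h / (pi * c), in kg*m

masses = {
    "electron": 9.10938356e-31,  # kg
    "proton": 1.672621898e-27,   # kg
}

# Radius coupled to each mass by [F1]: r = F_EK / m (reciprocal proportionality)
radii = {name: F_EK / m for name, m in masses.items()}

for name, m in masses.items():
    print(f"{name:8s} m = {m:.6e} kg, r = {radii[name]:.6e} m, "
          f"m*r = {m * radii[name]:.6e} kg*m")
```

The ratio of the two radii is the inverse of the mass ratio (r_e / r_p = m_p / m_e ≈ 1836), while the product m·r is the same constant for both.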

e-e interaction

The term e-e interaction means that two elementary charge carriers interact. The most prominent example of this type of interaction is the proton-electron-based hydrogen atom.

mA = me = 9.10938356e-31 kg (electron mass)

mB = mp = 1.672621898e-27 kg (proton mass)

c = 2.99792458e+08 m/s

α = 0.0072973525664

e-q0 interaction

Elementary body charge carrier A(q0) interacts with elementary charge carrier B(e). The most prominent example of this type of interaction is the proton-electron-based neutron. The neutron mass mn arises from a matter-forming charge interaction of the electron and the proton and can be understood and calculated from the interaction of the elementary body charge q0 for the electron and the elementary electric charge e for the proton.

me = 9.10938356e-31 kg

me(q0) = (4/α) · me = 4.99325391071e-28 kg

c = 2.99792458e+08 m/s

mp = 1.672621898e-27 kg

∆m = 1.405600680072e-30 kg

∆Eee = 1.263290890450e-13 J ≈ 0.78848416 MeV

Taking into account the phenomenologically based, approximation-free approach, in the formal-analytic form of the equation

$$m_n = m_p + m_e + \Delta m \qquad [mq0e]$$

the "theoretical" result of the elementary-body-theory-based neutron mass calculation (according to the charge-dependent proton-electron interaction) is “sensational”. In addition, a calculation of the magnetic moment of the neutron can be found below (see SUMMARY of FORMULAS).
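The arithmetic of the neutron-mass step can be reproduced with a short Python sketch. It takes ∆m as quoted (its derivation from the α-function equations is not shown in this copy), recomputes me(q0) = (4/α)·me and ∆E = ∆m·c², sums [mq0e] mn = mp + me + ∆m, and compares the result with the CODATA 2014 neutron mass, chosen because the constants above are CODATA 2014 values. Constant names are illustrative.

```python
ALPHA = 0.0072973525664            # fine-structure constant (value used in the text)
C = 2.99792458e8                   # speed of light in m/s
M_E = 9.10938356e-31               # electron mass in kg
M_P = 1.672621898e-27              # proton mass in kg
DELTA_M = 1.405600680072e-30       # Delta m in kg, taken as quoted in the text
J_PER_MEV = 1.602176634e-13        # joules per MeV
M_N_CODATA_2014 = 1.674927471e-27  # CODATA 2014 neutron mass in kg, for comparison

m_e_q0 = (4 / ALPHA) * M_E         # me(q0) = (4/alpha) * me
delta_E = DELTA_M * C**2           # Delta E = Delta m * c^2, in J
delta_E_mev = delta_E / J_PER_MEV  # the same energy in MeV
m_n = M_P + M_E + DELTA_M          # [mq0e]: mn = mp + me + Delta m

print(f"me(q0) = {m_e_q0:.11e} kg")
print(f"dE = {delta_E:.12e} J = {delta_E_mev:.8f} MeV")
print(f"mn = {m_n:.10e} kg, mn / mn(CODATA 2014) = {m_n / M_N_CODATA_2014:.8f}")
```

The summed value exceeds the CODATA 2014 neutron mass by roughly 6.5 parts per million.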

q0-q0 interaction

Charge carriers A and B interact via the elementary body charge q0. The most prominent example of this type of interaction is the proton-electron-based charged pion.

mA = me = 9.10938356e-31 kg (electron mass); q0mA = (4/α) · me = 4.99325391071e-28 kg; mB = mp = 1.672621898e-27 kg (proton mass); q0mB = (4/α) · mp = 9.16837651891e-25 kg; c = 2.99792458e+08 m/s; α = 0.0072973525664

∆m = 4.99053598e-28 kg; (∆m/2) / mπ(exp) ≈ 1.00289525 ... Here ∆m denotes the mass of two charged pions (matter creation).
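The α-function equations are not reproduced in this text version, so the following Python sketch rests on an explicit assumption: a reduced-mass-like form ∆m = q0mA · q0mB / (q0mA + q0mB), with q0m = (4/α) · m. This form is not taken from the original; it is merely one expression that reproduces the quoted ∆m for the electron-proton pair and, for equal masses, the p+/p- and e+/e- values quoted further below. The function names are hypothetical, and the elementary charge used for the unit conversion is a CODATA 2014 value:

```python
ALPHA = 0.0072973525664
C = 2.99792458e8             # m/s
M_E = 9.10938356e-31         # electron mass in kg
M_P = 1.672621898e-27        # proton mass in kg
E_CHARGE = 1.6021766208e-19  # assumption: CODATA 2014 elementary charge, for kg -> MeV/c²


def q0_mass(m: float) -> float:
    """q0-coupled mass (4/α) · m, as defined in this section."""
    return (4 / ALPHA) * m


def delta_m_q0q0(m_a: float, m_b: float) -> float:
    """HYPOTHETICAL q0-q0 mass term: q0mA · q0mB / (q0mA + q0mB).
    Chosen only because it reproduces the values quoted on this page."""
    qa, qb = q0_mass(m_a), q0_mass(m_b)
    return qa * qb / (qa + qb)


def kg_to_mev(m: float) -> float:
    """Convert a mass in kg to its rest energy in MeV."""
    return m * C**2 / E_CHARGE / 1e6


dm = delta_m_q0q0(M_E, M_P)
print(f"∆m     = {dm:.8e} kg")
print(f"∆m / 2 = {kg_to_mev(dm / 2):.3f} MeV/c² (one charged pion)")
```

Under this assumption, ∆m / 2 corresponds to roughly 140 MeV/c².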

It is highly doubtful to what extent experimental particle physics can accurately determine pion rest masses. The neutral pion, whose mass differs from that of the charged pions, is a "pion" only under the requirements of the SM. The abstraction that treats particles of different masses as "equal" via postulated QM superpositions (keyword: quarkonia) is one of the many arbitrary hypotheses within the SM (see the SM quark-mass uncertainties in the percent range) and is unfounded "outside" the mathematical formalism of the SM.

For interaction partners of equal mass (for example proton-antiproton or electron-positron), the general α-function equations, for example those of the q0-q0 interaction, simplify to the equations [Eq0q0] and [mq0q0]:
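In this text version the equations [Eq0q0] and [mq0q0] themselves are not shown. As an assumption (a numerical reconstruction, not the original equations), a q0-q0 mass term of reduced-mass form reproduces all values quoted in this section; for equal masses mA = mB = m it reduces as follows:

```latex
\begin{aligned}
\Delta m_{q_0q_0}(m_A, m_B) &= \frac{q_0m_A \cdot q_0m_B}{q_0m_A + q_0m_B},
  \qquad q_0m = \frac{4}{\alpha}\,m \\
m_A = m_B = m:\quad \Delta m_{q_0q_0} &= \frac{q_0m}{2} = \frac{2m}{\alpha},
  \qquad \Delta E_{q_0q_0} = \Delta m_{q_0q_0}\,c^2 = \frac{2mc^2}{\alpha}
\end{aligned}
```

With mc² = 938.2720813 MeV for the (anti)proton this gives ∆E ≈ 257.154 GeV, and with mc² = 0.5109989461 MeV for the electron/positron ∆E ≈ 140.05 MeV, matching the values quoted below.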

"Surprising" is the fact that, in the context of charge-dependent matter formation, the strong proton-antiproton interaction yields a matter-formation energy of ~257 GeV that depends only on the (anti)proton mass and Sommerfeld's fine-structure constant α. In accordance with charge conservation, this energy can be realised as two mass-radius-coupled "small mass heaps" (Masse-Häufchen), which map the uncharged and charged Higgs boson masses.

α = 0.0072973525664

∆E(p+, p-) = 257.15410429801 GeV; ∆m(p+, p-) = 4.584188259456e-25 kg [2q0q0]; ∆m(p+, p-) / 2 = 128.57705215 GeV/c²; mH(0) ≈ 2.228e-25 kg ≈ 125 GeV/c²; (∆m(p+, p-) / 2) / mH(0) ≈ 1.02861642

This means that, with an "error" of ~2.9% relative to the Higgs boson mass "detected" at the LHC (mH(0) ~ 125 GeV/c²), elementary body theory predicts an event that exists in the Standard Model of particle physics (SM) only as a theoretically predetermined, methodologically circular conclusion.
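The p+/p- numbers can be reproduced in Python under the equal-mass assumption ∆m = 2m/α (a reconstruction that matches the quoted values, not the original equation). The elementary charge used for the kg to GeV conversion is an added CODATA 2014 value; 125 GeV/c² is the reference Higgs mass used in the text, and the function names are hypothetical:

```python
ALPHA = 0.0072973525664
C = 2.99792458e8               # m/s
M_P = 1.672621898e-27          # (anti)proton mass in kg
E_CHARGE = 1.6021766208e-19    # assumption: CODATA 2014 elementary charge (J -> eV)
M_H0_GEV = 125.0               # Higgs mass reference value used in the text


def delta_m_equal(m: float) -> float:
    """Assumed equal-mass q0-q0 term: (4/α)·m / 2 = 2m/α."""
    return 2 * m / ALPHA


def kg_to_gev(m: float) -> float:
    """Convert a mass in kg to its rest energy in GeV."""
    return m * C**2 / E_CHARGE / 1e9


dm = delta_m_equal(M_P)
dE_gev = kg_to_gev(dm)
half_gev = dE_gev / 2
ratio = half_gev / M_H0_GEV

print(f"∆m(p+, p-)    = {dm:.12e} kg")
print(f"∆E(p+, p-)    = {dE_gev:.6f} GeV")
print(f"∆m/2 vs Higgs = {half_gev:.6f} / {M_H0_GEV} = {ratio:.8f}")
```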

by the way ...

There are aspects of the Higgs boson mass predictions that are little known.

David and Sidney Kahana's predictions of the Higgs boson mass and the top quark mass (1993!!!) "in a parameter free fashion" are very precise. Source: https://arxiv.org/pdf/hep-ph/9312316.pdf

According to the Standard Model (SM), such predictions are not possible. How do you explain this obvious discrepancy?

"Peter Higgs knew about their work … he said, 'You’re from Brookhaven, right. Make sure to tell Sid Kahana that he was right about the top quark 175 GeV and the Higgs boson 125 GeV' [Kahana and Kahana 1993]."… Source: https://arxiv.org/pdf/1608.06934.pdf

One would assume that highly accurate calculations of the top quark mass and the Higgs mass are remarkable. Why didn’t the "Kahanas" get the "proper" attention? Why is there no adequate mention of these theoretical achievements?

I strongly believe that

For further reading see https://arxiv.org/pdf/1112.2794.pdf

... "predictions by the authors D. E. Kahana and S. H. Kahana, mH = 125 GeV/c² uses dynamical symmetry breaking with the Higgs being a deeply bound state of two top quarks. At the same time (1993) this model predicted two years prior to the discovery to the top its mass to be mt = 175 GeV/c²..." Notice! There is just one outstanding »prediction paper« (1993, https://arxiv.org/pdf/hep-ph/9312316.pdf) which, with one and the same theoretical approach, arrives at the Higgs boson mass and the top quark mass prior to their experimental confirmation in 1995 (top) and 2012 (Higgs).

The uncharged pion ... a pion matter possibility from the q0-q0 interaction

q0mA = (4/α) · me = 4.99325391071e-28 kg

∆E(e+, e-) = 140.05050232093 MeV [E2q0q0]; ∆m(e+, e-) = 2.496626955355e-28 kg [2q0q0]; mπ0 = 2.4061764315e-28 kg (SM, theory-laden); ∆m(e+, e-) / mπ0 ≈ 1.037591
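The e+/e- values follow in the same way. A short Python check, again assuming the equal-mass form ∆m = q0m/2 (a reconstruction, not the original [mq0q0] equation) and a CODATA 2014 elementary charge for the kg to MeV conversion; mπ0 is the value quoted above:

```python
ALPHA = 0.0072973525664
C = 2.99792458e8               # m/s
M_E = 9.10938356e-31           # electron/positron mass in kg
E_CHARGE = 1.6021766208e-19    # assumption: CODATA 2014 elementary charge (J -> eV)
M_PI0 = 2.4061764315e-28       # neutral-pion mass in kg, as quoted above

q0_me = (4 / ALPHA) * M_E      # q0mA = (4/α) · me
dm = q0_me / 2                 # assumed equal-mass form: ∆m = q0m / 2
dE_mev = dm * C**2 / E_CHARGE / 1e6
ratio = dm / M_PI0

print(f"∆m(e+, e-) = {dm:.12e} kg")
print(f"∆E(e+, e-) = {dE_mev:.6f} MeV")
print(f"∆m / mπ0   = {ratio:.6f}")
```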

SM linguistic usage… Particle physicists generally use the phenomenologically wrong term "decay" although they mean transformations. Decay would mean that the decay products were (all) components of the decaying particle. But this is not the case, at least not within the framework of the theoretical implications and postulates of the Standard Model of particle physics.

Wishful thinking and reality

I find it quite amusing and right to the point how Claes Johnson, a Professor of Applied Mathematics, classifies ... Claes Johnson on QM and SRT: "Concerning the crisis of modern physics it is commonly accepted that one reason is that the two basic building blocks, relativity theory and quantum mechanics, are contradictory/incompatible. But two theories which are physical cannot be contradictory, because physics which exists cannot be contradictory. But unphysical theories may well be contradictory, as ghosts can have contradictory qualities. The Special Theory of Relativity of Einstein is unphysical because the Lorentz transformation is not a transformation between physical coordinates, as strongly underlined by its inventor Lorentz, but misunderstood by the patent clerk Einstein believing that the transformed time is real and thus that time is relative. Quantum Mechanics is unphysical because its interpretation is statistical which makes it non-physical, because physics is not an insurance company. Here Einstein was right understanding that God does not play dice." Claes Johnson, Professor of Applied Mathematics, Royal Institute of Technology (KTH), Stockholm, Sweden

______________________________________________

Albert Einstein

It could be helpful to remember what Albert Einstein wrote on quantum mechanics: [1] "The ψ function is to be understood as a description not of a single system but of a system community [Systemgemeinschaft]. Expressed in raw terms, this is the result: In the statistical interpretation, there is no complete description of the individual system. Cautiously one can say this: The attempt to understand the quantum theoretical description of the individual systems leads to unnatural theoretical interpretations, which immediately become unnecessary if one accepts the view that the description refers to the system as a whole and not to the individual system. The whole approach to avoid the 'physically real' then becomes superfluous. [Es wird dann der ganze Eiertanz zur Vermeidung des ‘Physikalisch-Realen’ überflüssig.] However, there is a simple psychological reason why this obvious interpretation is avoided. If statistical quantum theory does not pretend to describe the individual system (and its temporal sequence) completely, then it seems inevitable to look elsewhere for a complete description of the individual system. It would be clear from the start that the elements of such a description would not be contained within the conceptual scheme of statistical quantum theory. With this, one would admit that in principle this scheme cannot serve as the basis of theoretical physics."

[1] A. Einstein, Out of My Later Years. Philosophical Library.

According to the Copenhagen interpretation of 1927, the probabilistic character of quantum theoretical predictions is not an expression of the imperfection of the theory, but of the essentially indeterministic (unpredictable) character of quantum physical natural processes. Furthermore, the "objects of the formalism" "replace" reality without possessing a reality of their own.

In the period after the Second World War, the Copenhagen interpretation had prevailed; textbooks now presented only the Heisenberg-Bohr quantum theory, without critical comment.

___________________________________________

Brigitte Falkenburg writes in Particle Metaphysics: A Critical Account of Subatomic Reality (2007), among other things: "It must be made transparent step by step what physicists themselves consider to be the empirical basis for current knowledge of particle physics. And it must be transparent what they mean in detail when they talk about subatomic particles and fields. The continued use of these terms in quantum physics gives rise to serious semantic problems. Modern particle physics is indeed the hardest case for incommensurability in Kuhn’s sense." (Kuhn 1962, 1970) … "After all, theory-ladenness is a bad criterion for making the distinction between safe background knowledge and uncertain assumptions or hypotheses." … "Subatomic structure does not really exist per se. It is only exhibited in a scattering experiment of a given energy, that is, due to an interaction. The higher the energy transfer during the interaction, the smaller the measured structures. In addition, according to the laws of quantum field theory at very high scattering energies, new structures arise. Quantum chromodynamics (i.e. the quantum field theory of strong interactions) tells us that the higher the scattering energy, the more quark antiquark pairs and gluons are created inside the nucleon. According to the model of scattering in this domain, this gives rise once again to scaling violations which have indeed been observed.44 This sheds new light on Eddington’s old question on whether the experimental method gives rise to discovery or manufacture. Does the interaction at a certain scattering energy reveal the measured structures or does it generate them?" (44: Perkins 2000, 154; Povh et al. 1999, 107–111) … "It is not possible to trace a measured cross-section back to its individual cause.
No causal story relates a measured form factor or structure function to its cause"… "With the beams generated in particle accelerators, one can neither look into the atom, nor see subatomic structures, nor observe pointlike structures inside the nucleon. Such talk is metaphorical. The only thing a particle makes visible is the macroscopic structure of the target"… "Niels Bohr’s quantum philosophy… Bohr’s claim was that the classical language is indispensable. This has remained valid up to the present day. At the individual level of clicks in particle detectors and particle tracks on photographs, all measurement results have to be expressed in classical terms. Indeed, the use of the familiar physical quantities of length, time, mass and momentum-energy at a subatomic scale is due to an extrapolation of the language of classical physics to the non-classical domain."...

The Quark Parton Model (QPM), developed by Richard Feynman in the 1960s, describes nucleons as composed of basic point-like constituents, which Feynman called partons. These constituents were later identified with the quarks postulated independently, at about the same time, by Gell-Mann and Zweig a few years earlier. According to the Quark-Parton Model, a deep inelastic scattering (DIS) event is to be understood as an incoherent superposition of elastic lepton-parton scattering processes. A cascade of interaction conjectures, approximations, corrections and additional theoretical objects subsequently "refined" the theoretical nucleon model. A fundamental (epistemological) problem is immediately recognizable: all experimental setups, implementations and interpretations of deep inelastic scattering are extremely theory-based. Fundamental contradictions exist at the theoretical basis of the Standard Model of particle physics which, despite better knowledge, are not corrected. An example:

The nonexistent spin of quarks and gluons

A landmark, far-reaching wrong decision was made in 1988.

The first assumption, based on the theoretical specifications of the mid-1960s, was that in the SM picture the proton spin is composed 100% of the spin contributions of the quarks. This assumption was not confirmed by the EMC experiments in 1988. On the contrary, much smaller, even zero-compatible contributions were measured (ΔΣ = 0.12 ± 0.17, European Muon Collaboration). The next assumption, that (postulated) gluons contribute to the proton spin, did not yield the desired result either. In the third, current version of the theory, quarks, gluons (... virtual quark-antiquark pairs, if one wishes) and ... their dynamic-relativistic orbital angular momenta generate the proton spin.

On closer inspection, the second readjustment has the "putative advantage" that the result, "calculated" algorithmically within lattice gauge theory and with constructs such as "pion clouds", cannot be falsified. But this purely theory-based construction obviously does not justify the classification of quarks as fermions. No matter how the asymmetric ensemble of unobservable, postulated theoretical objects and interactions is advertised now or in the future, the quarks themselves were never "measured" as spin-½ particles.

Summary in simple words: It is possible to create a theory-laden ensemble of quarks and "other" theory objects and their postulated interactions, but the quark itself, as an entity, still has no intrinsic spin ½ in this composition. That means that quarks aren’t fermions, no matter what the current theoretical approach is! This is a basic, purely analytical and logical statement.

Generally speaking: If one postulates a theoretical entity with an intrinsic value, but then discovers that one needs to add theoretical objects and postulated interactions to obtain the desired intrinsic value, one has to admit that one's entity does not have this physical characteristic as such.

Furthermore: In sum, the quark masses postulated by the SM fall far short of the nucleon masses. Gluons are massless.

Postulated up-quark mass (u): 2.3 (+0.7/−0.5) MeV/c²

Postulated down-quark mass (d): 4.8 (+0.5/−0.3) MeV/c²

Proton mass (uud): 938.2720813(58) MeV/c²; quark mass fraction ~0.8–1.2% (!!!)

Neutron mass (udd): 939.5654133(58) MeV/c²; quark mass fraction ~1.1–1.4% (!!!)
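The quoted fractions can be checked by summing the bare quark masses over the valence content (uud for the proton, udd for the neutron) and taking the extremes of the quoted uncertainty bands. A short Python sketch; treating the bounds as simple additive extremes is an assumption of this example:

```python
# Quark masses in MeV/c² as quoted above (PDG 2013): (central, +upper, -lower)
U = (2.3, 0.7, 0.5)
D = (4.8, 0.5, 0.3)
M_PROTON = 938.2720813    # MeV/c²
M_NEUTRON = 939.5654133   # MeV/c²


def bounds(q):
    """Lowest and highest mass allowed by the quoted asymmetric uncertainties."""
    central, plus, minus = q
    return central - minus, central + plus


def fraction_range(n_u, n_d, nucleon_mass):
    """Percentage of the nucleon mass carried by the bare valence-quark masses:
    (lowest, central, highest)."""
    u_lo, u_hi = bounds(U)
    d_lo, d_hi = bounds(D)
    lo = n_u * u_lo + n_d * d_lo
    mid = n_u * U[0] + n_d * D[0]
    hi = n_u * u_hi + n_d * d_hi
    return tuple(100 * x / nucleon_mass for x in (lo, mid, hi))


p = fraction_range(2, 1, M_PROTON)    # proton: uud
n = fraction_range(1, 2, M_NEUTRON)   # neutron: udd
print(f"proton  (uud): {p[0]:.2f}% to {p[2]:.2f}% (central {p[1]:.2f}%)")
print(f"neutron (udd): {n[0]:.2f}% to {n[2]:.2f}% (central {n[1]:.2f}%)")
```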

Thus, even heavy ions composed of protons and neutrons (such as lead or gold nuclei) cannot be represented by quarks and gluons. According to the principle of mass-energy equivalence, this means that nucleons, and ultimately heavy ions, consist almost entirely of phenomenologically indeterminate binding energy. Even more complicated is the fact that the ions are accelerated to nearly the speed of light before they collide. This means that a considerable amount of external energy is added to the binding energy. Neither the theory of relativity nor the SM tells us how this energy can be divided phenomenologically into translational energy and "mass equivalence".

Protagonists of the SM are so convinced of their beliefs that they have evidently lost sight of the essential. Why should a postulated complex, multi-object-asymmetric, charge-fragmented, dynamic substructure produce a spin value of ½ and an elementary charge of exactly 1·e as a temporal or statistical mean over dynamic states? The comparison with the SM's point-like "leptonic" electron, with spin value ½ and elementary charge 1·e, which are "created" without "dynamic effort" and structure, exposes the quark-gluon thesis as a fairy tale.

The Quark Parton Model describes a complex theoretical situation and associated theory-laden (high-energy) experiments, whose understanding is shaped by diverse aspects and a whole series of postulates. For what the (here relatively simply described) nested theory-experiment construct looks like, see, as a representative example, the explanations (parts 1–3) of the Institute for Nuclear and Particle Physics, Dresden. Part 1 already fails early on, in its interpretation of the presented model, because of "theory-internal" assumptions. ... "This is remarkable, especially the neutron as an electrically neutral particle should have vanishing magnetic moment. This already indicates that protons and neutrons are not point-like, but have an internal structure..." Is the magnetic moment of the neutron really evidence of a substructure? Or is this assumption merely a theory-laden measurement interpretation of the Standard Model? The interpretation of a neutron-quark (sub)structure loses its meaning if one examines the situation numerically (independently of the thought model). The supposedly anomalous magnetic moments of the electron, the proton and the neutron are ultimately a combination of the "semi-classical" (most simply calculated) "normal" magnetic moments and measurement-inherent contributions that originate from the magnetic field used for the measurement. This qualitative statement can be made concrete (in agreement with the measured values). See also the remarks on the neutron from the point of view of a mass-radius-coupled model, with which both the mass and the magnetic moment of the neutron can be calculated exactly, and in a phenomenologically comprehensible way, from the neutron-forming interaction of electron and proton. The quark-parton model is an excellent object lesson in the series of creeds (as epicycle and phlogiston theory once were).
Interpretations of experimental results led to some theoretical assumptions, which then, through further interpretations involving new theory objects and postulated interactions, quickly reached a substructured complexity that ultimately eliminates any inconvenient experimental results by appropriate measures. First there were the postulated quarks. These required a fragmentation of the elementary electric charge. Then came gluons, since the quarks did not provide the corresponding spin contributions to the nucleons. After that, so-called "sea quarks" were introduced as a supplement, since the postulated gluons did not provide a corresponding spin contribution either. For details of the "established" nucleon model, see part 2 and part 3 (The Quark-Parton-Model) ... The believers' analysis then ends with the finale of faith ... "The nucleon is therefore built up from valence quarks, sea quarks and gluons. But for the properties of the nucleon (and all hadrons) like charge, mass and spin only the composition of the valence quarks is responsible. Therefore one often speaks of constituent quarks and means the valence quarks plus the surrounding cloud of sea quarks and gluons. Sea quarks and gluons do not contribute to the (net) quantum numbers, but they do contribute to the mass of the hadron, since they carry energy and momentum. While the bare mass of the valence quarks is only a few MeV/c², the constituent quarks carry the respective fraction of the hadron mass, in the case of the nucleon thus about 300 MeV/c². This is thus predominantly contributed by the gluons and sea quarks. In other words, the vast majority of the hadron masses (and thus the visible mass in the universe) is not carried by the bare masses of the constituents, but is dynamically generated by the energy of the interaction!" Isn't that nice? There are clouds, bare masses and the God-analogous indeterminate, which accounts for about 99%, given the postulated masses of the "matter-forming" quarks.
But the fairy tale of faith does not really end here. In a bigger picture (ΛCDM model), it is now postulated that the visible mass is, in turn, only a small part of the mass in the universe. For today's Standard Model believers, the universe consists mostly of Dark Matter and Dark Energy. "Dark" here stands for "not detectable". No wonder the Vatican, highly delighted, invites to its science prayer year after year. Theodor Fontane described why neither the preachers nor the people see the light: "We are already deep in decadence. The sensational is what counts, and only one thing the crowd flocks to even more enthusiastically: sheer nonsense."

The "fragmentation of matter" as an »end in itself« of mathematical theories, and the inevitable increase in irrelevant knowledge, especially in the form of virtual particles, has become established standard thinking. Instead of leading to simplification, formal postulations and "refining theories" evidently end not in a growth of knowledge but in scientific arbitrariness. Mathematically based fundamental physics urgently requires a corrective oriented toward natural philosophy.

You might consider the following notes about neutrinos… There is not a single direct proof of the neutrino. All evidence consists of strongly theory-laden interpretations of experimental results. To generate the fermion masses by coupling the fermions to the Higgs field, the following conditions must be met: the masses of the right-handed and left-handed fermions must be equal, and the neutrino must remain massless. This basic condition is in blatant contradiction to neutrino oscillations (Nobel Prize 2015), which require neutrino masses. Consequence: either we say goodbye to the Standard Model of particle physics (SM) or to neutrinos with mass. For the sake of completeness, it should be mentioned that, since one does not want to part with the Standard Model, there is always the possibility of "somehow" incorporating neutrino masses through mathematical additions. However, this is only possible because the construct of the SM has no verifiable reference to real physics at all, i.e. all alleged SM-associated object proofs are indirect, strongly theory-laden interpretations of experiments. Energetically, there is no unambiguous process for the transformation of a d-quark into a u-quark. Independently of the SM-constructed lepton number, the following should be remembered: the neutrino was introduced historically because the energy spectrum of the (emitted) electrons shows not a discrete but a continuous distribution. But if the electron antineutrino, whatever its mass limit, "kidnaps" the "missing" energy from the laboratory system and can only "act" (effectively*) through the Weak Interaction, then this simply means that a kinetic-energy continuum of the postulated neutrino must already have existed during the Weak Interaction process, because after this process, according to the postulate, no further interaction is possible. But how is this to be explained phenomenologically?
The terse statement of the SM that the beta-minus decay of the neutron proceeds via the conversion of a d-quark into a u-quark by means of a negatively charged W boson says nothing about the concrete process: how, from where and why the electron antineutrino absorbs different amounts of energy during the weak interaction in order to compensate for the "missing" energy in the electron spectrum. On closer inspection, the situation is far more complex, since both the postulated quark-based neutron and the quark-based proton consist of ~99% undefined binding energy, so that the weak interaction (energetically) affects only ~1% of the decay process. The postulated mass of the d-quark is 4.8 (+0.5/−0.3) MeV/c², that of the u-quark 2.3 (+0.7/−0.5) MeV/c² (quark masses: http://pdg.lbl.gov/2013/tables/rpp2013-sum-quarks.pdf). This means that the mass difference lies between 1.5 and 3.5 MeV/c². The electron antineutrino, with a mass (upper limit) of ≤ 2.2 eV/c², can absorb a maximum of ~0.78 MeV. According to the electron energy spectrum, however, the average energy "abducted from the laboratory system" by the neutrino is much smaller than 0.78 MeV. What happened to the missing weak-interaction energy? It cannot have become "gluonic binding energy", since gluons do not participate in the postulated transformation of a d-quark into a u-quark. Nor can the virtual magic of the ~80.4 GeV/c² W boson absorb anything in real-energy terms. Here, more than ever, the following holds for prevailing physics: "It is important to realize that in physics today, we have no knowledge of what energy is."... So in the SM picture we initially have 1 u-quark and 2 d-quarks, in the meantime a W boson and ~99% binding energy (whatever that is supposed to be phenomenologically), and after the transformation 2 u-quarks, 1 d-quark, 99% binding energy, 1 electron antineutrino, 1 electron and additionally ~0.78 MeV of energy.
No matter what the possible distributions of the ~0.78 MeV among the proton (2 u-quarks, 1 d-quark, 99% binding energy), the electron and the electron antineutrino look like, the weak interaction process itself would already have to guarantee these distribution possibilities, since after the weak interaction no further energy transfer from the neutrino to the proton and electron is possible. This means, however, that there can be no discrete transformation process of a d-quark into a u-quark. Non-discrete here means in particular: energetically, there is no unambiguous process for the transformation of a d-quark into a u-quark. From the neutron's d-quark, energetically different electron antineutrinos are created by the weak interaction, since after their creation, interaction possibilities other than the weak interaction are excluded. Thus, there is no energetically unambiguous transformation of a d-quark into a u-quark. Apart from this problem, it should also be remembered, for the sake of completeness, that experimentally we are not dealing with single objects but with many-particle objects (more than one neutron), and that accelerated charges radiate energy. It can hardly be assumed that the created protons and electrons "suddenly" exist with a constant speed. "Where" is the associated photon spectrum of beta-minus decay? What does it look like?
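The two figures the argument compares, the quoted range of the d-u quark mass difference and the ~0.78 MeV released in neutron beta-minus decay, can be reproduced with a short Python sketch. The electron rest energy (CODATA 2014) is an added input not quoted in this section, and the neutrino mass is neglected:

```python
# Quark masses in MeV/c² as quoted in the text: (central, +upper, -lower)
U = (2.3, 0.7, 0.5)
D = (4.8, 0.5, 0.3)

# Particle masses in MeV/c²; M_ELECTRON (CODATA 2014) is an added input
M_NEUTRON = 939.5654133
M_PROTON = 938.2720813
M_ELECTRON = 0.5109989461

# Range of m_d - m_u permitted by the quoted uncertainty bands
diff_min = (D[0] - D[2]) - (U[0] + U[1])   # lightest d, heaviest u
diff_max = (D[0] + D[1]) - (U[0] - U[2])   # heaviest d, lightest u

# Energy released in neutron beta-minus decay (neutrino mass neglected):
# Q = (m_n - m_p - m_e) c²
q_value = M_NEUTRON - M_PROTON - M_ELECTRON

print(f"m_d - m_u: {diff_min:.1f} to {diff_max:.1f} MeV/c²")
print(f"Q (neutron beta-minus decay): {q_value:.4f} MeV")
```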

According to the SM, neutrino research "means", for example: ...One measures the currents of the kaons and pions and indirectly determines the flux of the neutrinos... However, pions (π+, π−, π0) and, even more so, kaons (K+, K−, K0, K̄0) are highly constructed entities of the Standard Model (for an initial understanding see https://en.wikipedia.org/wiki/Kaon , https://en.wikipedia.org/wiki/Pion ). That is: the number of existence postulates, such as mass, charge, spin, flavour(s), lifetimes and quark composition, is already "considerable". The possible transformations result in "manifold alternation-interaction-fantasy scenarios". Furthermore, the neutral kaon is not its own "antiparticle"; this leads (more generally) to the construction of particle-antiparticle oscillation (see https://en.wikipedia.org/wiki/Neutral_particle_oscillation ), and the neutral kaon is said to exist in two forms, a long-lived and a short-lived one. Concluding from this that neutrinos have "flavour-oscillating" properties increases the arbitrariness even further. To understand all this (reproducibly), one needs absolute faith in axiomatic creations. As a reward, however, one gets the carte blanche of making any experimental result "explainable" ... That, in connection with the "experimental side", roughly several dozen further result-oriented assumptions have to be made along the way does not matter to SM believers. To quote Egbert Scheunemann: "They first shoot an arrow at a barn door, then paint concentric circles around the point of impact and cheer about their 'bull's eye'." Without question, ad hoc hypotheses and thought experiments were and are fundamental concepts. It is just that "physical" theses must sooner or later be substantiated by stringent, consistent "models of thought" using simple mathematical means if they are to have any epistemological value.
The bad habit of iteratively glossing over missing knowledge and missing phenomenology within the framework of parametric creeds etc. means arbitrariness and a standstill of knowledge. Renormalisation, variable coupling constants, free parameters, endless substructuring, and perturbation theory with an affinity to Taylor series merely correct the "physical crap" that one has brought upon oneself over decades through a lack of insight. By the way… At a conference in Rome in 1931, Niels Bohr expressed the view that understanding beta decay did not require new particles, but rather an overthrow of existing ideas as serious as that in quantum mechanics. He doubted the law of conservation of energy, but without having developed a concrete counter-proposal…


Unfortunately, no complete English translation of the "Elementarkörpertheorie" is available yet. You'll find more detailed information if you select certain main topics from the website menu (in German). "Feel free" to use a common web browser translation tool. You'll discover useful information, insights and surprising equations for deducing and calculating physical values based on mass-radius relations, such as... Sommerfeld's fine-structure constant, neutron mass, mass(es) of charged pions, mass and radius of the universe, Planck units, cosmic microwave background temperature, ...

... "for now" you'll find "here" important results and a short description of how to obtain them ...

Anatomy of anomalous magnetic moments

Based on the experimental values for the magnetic moments of the electron, proton and neutron, it is concluded that the magnetic field itself provides an additive, measurement-inherent contribution ∆μB to the supposedly intrinsic values.

For purely logical reasons, the question arises whether these contributions (∆μe, ∆μp, ∆μn) have a common cause. The assumption presented here is that these contributions to the magnetic moments are measurement-inherent contributions of the external magnetic field and are not intrinsic. This is investigated and confirmed numerically, analytically, phenomenologically as well as formally.

to be continued...